Data Quality Assessment

Data quality assessment can be implemented in many ways and with many different software tools. Elait has the specialised skills to help your organisation get clear, actionable feedback on the quality of its data.

Using Ab Initio’s Data Quality Assessment Framework, Elait can help with end-to-end implementation and setup. This includes building a standardised Business Glossary that assigns consistent meanings to business terms and reference data, resolving differences in definitions across regions and lines of business.

Additionally, by mapping Business Terms and Domain Code sets to the technical metadata, a company can link physical entities such as dataset fields and table columns to Business Terms in its Metadata Hub Business Glossary. Automation features such as semantic discovery make it faster to set up a data quality programme through a centralised Metadata Portal.

A data quality assessment is set up from two elements:

- A single data source, such as a database table or file with a particular set of fields.

- Tests for each field that detect when data values are missing or do not meet requirements.

After a source is selected, the Validation Rules window can be used to specify tests that detect issues. You can specify standard validation tests such as Not Blank or Minimum for data in individual fields, or write business rules to detect data relationship issues between fields.

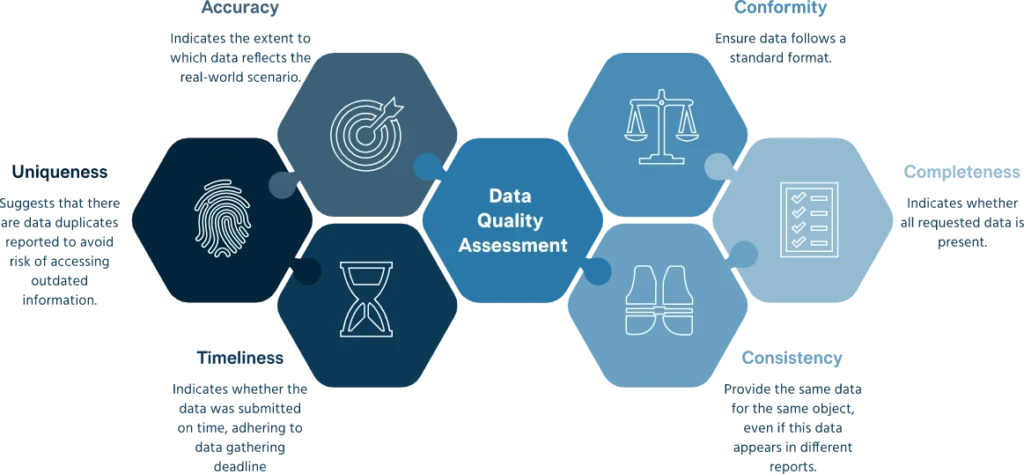
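To make the two kinds of test concrete, here is a minimal sketch in plain Python. It is not Ab Initio syntax and does not reflect how the Validation Rules window stores its rules; every name in it (`not_blank`, `minimum`, `FIELD_TESTS`, `close_after_open`, the sample fields) is a hypothetical chosen for illustration. It shows field-level tests in the spirit of Not Blank and Minimum, plus one business rule that checks a relationship between two fields.

```python
# Hypothetical names throughout; this is plain Python, not Ab Initio syntax.

def not_blank(value):
    """Pass when the value is present and not only whitespace."""
    return value is not None and str(value).strip() != ""

def minimum(limit):
    """Build a test that passes when the value is at least `limit`."""
    def check(value):
        return value is not None and value >= limit
    return check

# Field-level tests: field name -> list of (issue label, test function).
FIELD_TESTS = {
    "customer_id": [("not_blank", not_blank)],
    "balance": [("minimum_0", minimum(0))],
}

def close_after_open(record):
    """Business rule across two fields: closing date must not precede opening date."""
    closed = record.get("closed_on")
    return closed is None or closed >= record["opened_on"]

# Record-level rules that look at more than one field.
RECORD_RULES = [("close_after_open", close_after_open)]

def validate(record):
    """Return the list of issue labels raised by one record."""
    issues = []
    for field, tests in FIELD_TESTS.items():
        for label, test in tests:
            if not test(record.get(field)):
                issues.append(f"{field}:{label}")
    for label, rule in RECORD_RULES:
        if not rule(record):
            issues.append(f"record:{label}")
    return issues

# ISO-formatted date strings compare correctly as plain strings.
sample = {
    "customer_id": " ",
    "balance": -5,
    "opened_on": "2024-03-01",
    "closed_on": "2024-01-15",
}
print(validate(sample))
```

In this sketch the sample record fails all three tests, so `validate` returns one label per failure. Keeping field tests and cross-field rules in separate tables mirrors the distinction drawn above between standard validation tests and business rules.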

A data quality metric is a criterion for assessing data quality over time and for determining how appropriate a given dataset is for a particular business purpose. Metrics such as completeness, conformity, and accuracy give the percentage of records that have no issues. Each of the standard metrics has associated issue codes. A Data Integrity assessment combines and correlates the issue detection results with a data profile.
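The relationship between issue codes and metric percentages can be sketched as follows. This is an illustrative calculation only: the issue codes (`BLANK`, `BAD_FORMAT`, `OUT_OF_RANGE`) and their mapping to metrics are assumptions made for the example, not the codes used by any particular product. Each metric is scored as the percentage of records that raised no issue code associated with it.

```python
# Hypothetical issue codes and their mapping to metrics; not taken from any product.
ISSUE_TO_METRIC = {
    "BLANK": "completeness",
    "BAD_FORMAT": "conformity",
    "OUT_OF_RANGE": "accuracy",
}

def metric_scores(issues_per_record):
    """Return, per metric, the percentage of records with no issue for that metric."""
    total = len(issues_per_record)
    scores = {}
    for metric in sorted(set(ISSUE_TO_METRIC.values())):
        # A record is clean for a metric when none of its issue codes map to it.
        clean = sum(
            1
            for issues in issues_per_record
            if not any(ISSUE_TO_METRIC.get(code) == metric for code in issues)
        )
        scores[metric] = 100.0 * clean / total if total else None
    return scores

# One list of issue codes per record; an empty list means no issues were detected.
issues_per_record = [[], ["BLANK"], ["BAD_FORMAT", "BLANK"], []]
print(metric_scores(issues_per_record))
# prints {'accuracy': 100.0, 'completeness': 50.0, 'conformity': 75.0}
```

Recomputing these scores on each run of the assessment gives the trend over time that the metric is meant to track.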

Trusted By

Elait has become our go-to team in the Data Governance space. They have made the Data Governance process uncomplicated and logical to understand, and practical to implement in our environment.

- Data Steward at a financial company