According to a 2021 Gartner Report, poor data quality alone costs organizations an average of $12.9 million every year. A Dun & Bradstreet report reveals businesses are missing revenue opportunities and losing customers due to bad data practices. Almost 20 percent of businesses have lost a customer due to using incomplete or inaccurate information about them, with a further 15 percent saying they failed to sign a new contract with a customer for the same reason.

Interestingly consequences due to bad data that impact society, economies of nations and businesses are not a new phenomenon. In 1999, an inconsistency of measuring units across the engineering team developing the Mars Orbiter and the NASA team led to a 125-million-dollar loss when the Mars Orbiter failed in its mission.

More recently, data discrepancy caused at a leading Airline company, perhaps due to inadequate investment in processes and systems ensuring data quality, resulted in damaging consequences and conceivably reputational loss. The airline had to apologize to its passengers for charging their tickets in dollars rather than miles, at one dollar per mile. So, a 17,500-mile ticket caused a $17,500 credit card charge. As a result, many customers were over-billed on their credit cards, ranging from $17,500 to $674,000.

To circumvent such familiar instances of slippages leading to questionable & mediocre quality of data getting through the Data Engineering & ETL processes, organisations should invest in robust data assurance programs that consider the operational, data and testing views to ensure quality data is made available to data scientists and analysts.

Case Study : Employing Data Validation to Meet Regulatory Requirements

Meeting regulatory standards requires high-quality data. For example, a bank received an MRA (Matter Requiring Attention) note from the regulator as they did not have confidence in the long-term (30-year) income projections that the bank had made.

Upon investigation, it came to light that the bank had multiple copies of commercial loans scattered across different systems and data warehouses. The income projection model was built on assuming that all copies of data across the systems were the same. But it turned out that the data across the systems were worked upon by individual departments for their outstanding views and reports and therefore compromised. Having identified the systemic gaps in the workflows, processes and authenticating procedures, the officials were able to generate a fresh set of validated projections for the regulator.

Such instances could be avoided if an appropriate testing view was taken to ensure the consistency of data obtained from different sources, increasing the chances of obtaining accurate results. However, there is a multitude of factors that contribute to challenges in ETL Testing. It’s important that businesses should be in the know of these challenges to minimise data loss and duplication.

The Challenges in ETL Testing

In our experience, the outcome assurance programs that promise data quality often regress due to not having a distinct three-dimensional view without measurable instructs.

The three dimensions with some indicative ruptures are detailed below.

Operational View :

- Test Bed Availability: Creating a test bed with a representative sample of data from all the data sources is a challenge faced by most organisations. Not having the right sample set to test against significantly increases the risk of defects leaking into production.

- Lack of compliance with Data Governance Rules: Over time, the source systems change, or the data providers may be replaced while DG policies remain unaltered with no updated revisions.

- Typically, the timestamp is used for incremental load, which could create issues when data is migrated from different time zones.

- Quite often ETL job runs for a few hours daily, and it’s essential to have the appropriate signature signalling ETL completion, to avoid missing records in the event of inadvertent data insertion or update during the process.

Data View :

- Data Volume, Variety & Complexity: Data Volume poses a significant problem in terms of writing up SQL queries to validate the data and then manually reviewing the results in Excel spreadsheets.

- Multiple Data Owners — Data could be from a list of source systems on different technologies, driven by different rules for data governance, and, more importantly, having different masters.

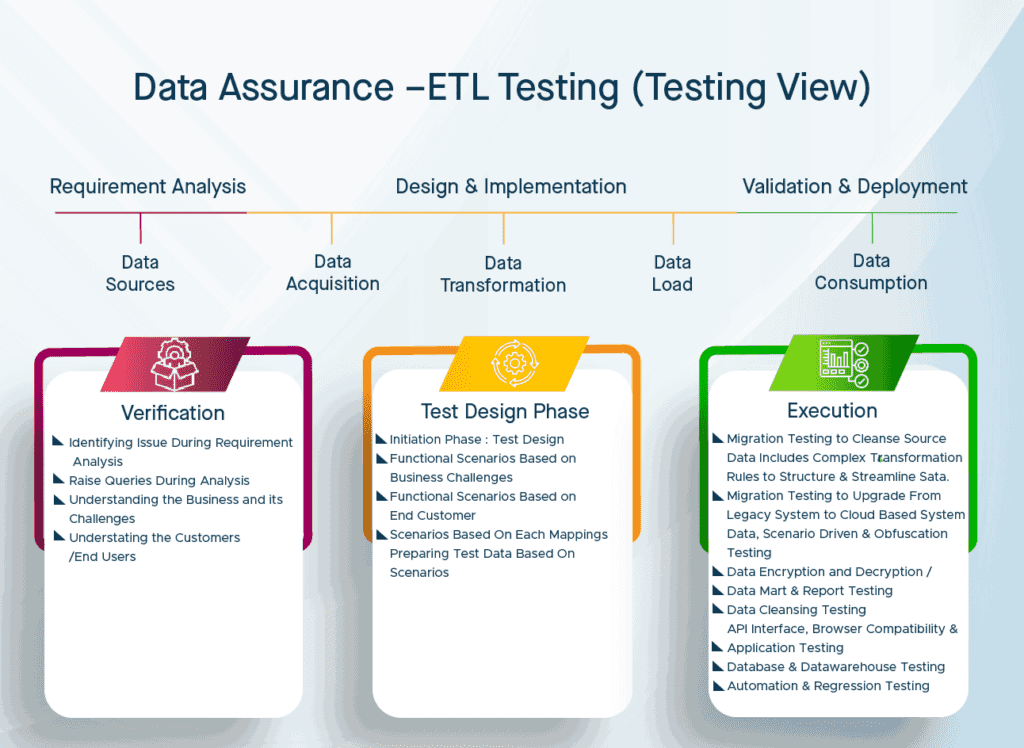

Testing View :

- Data loss during ETL testing.

- Duplicate data and Incompatibility.

- Data accuracy & completeness

- Data standardisation & consistency

- Data integrity & timeliness

- A large volume of historical data from legacy applications & systems makes ETL testing in the target system difficult.

- Restricted accessibility of source information

- Inheriting a broken system with broken data connections, perhaps due to the inclusion of new data formats without impact analysis, increased data velocity & addition of a few features, including new columns, dimensions, and derivatives.

At Elait, our experts propagate the idea and encourage having the above Operational, Data & Testing views to offset challenges. The pictures below give you a detailed understanding of each, along with the progressive tasks & validation through the data journey.

Businesses can ensure the availability of quality data from the ETL process by taking the three distinct data views upfront in the data integration process. This also acts as a preventive measure, as invalid or incorrect data frequently leads to missed opportunities, wasted time, and lost revenue.

It is essential to test and validate data across all stages of data integration, from creation to consumption. Validating data guarantees that all your company’s data is accurate and up to date.

Conclusion

Ensuring the usage of 3-dimensional views in the ETL testing process must be a conscious choice of every organisation where high-quality and curated data is essential. Elait regularly uses this approach as it enables organisations to reach high levels of the data accuracy within their critical data stores.

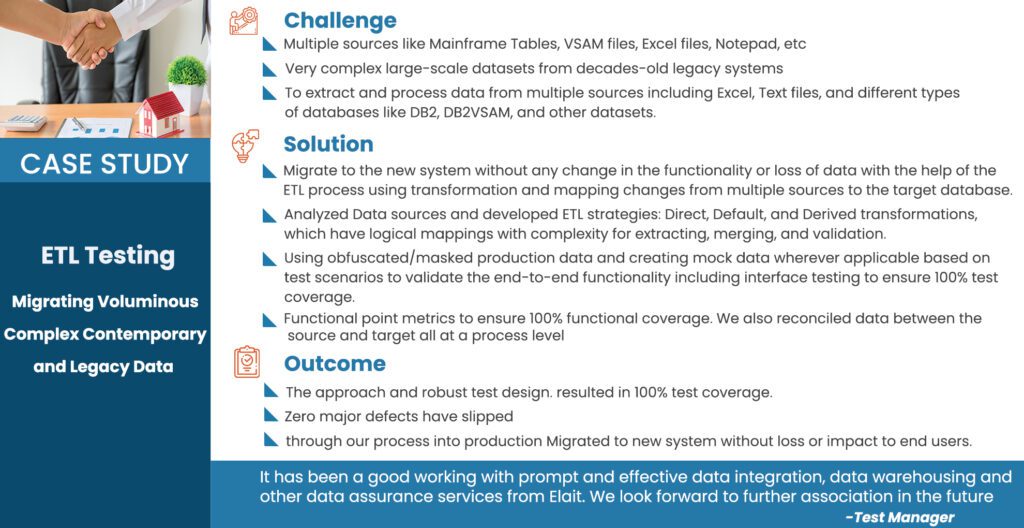

We recently executed an ETL testing assignment for a top global insurance & asset management company involving the migration of voluminous & complex contemporary and legacy data. The representative details are above.

Elait enables organisations to move ahead confidently, adding more effectiveness to the overall strategic decision-making process and maximising the use of data as an asset.

Read this article to learn How Successful Organizations Define A Reliable Data Governance Support Structure For The Precise Management Of Data Assets.

Reference

https://www.gartner.com/smarterwithgartner/how-to-improve-your-data-quality

https://www.forrester.com/report/Build-Trusted-Data-With-Data-Quality/RES83344

https://www.dataversity.net/solving-the-right-problem-a-case-study-in-risk-management/

https://lakefs.io/blog/data-quality-testing/